Google Launches New Tool To Fight Toxic Trolls In Online Comments

Image courtesy of Google

“Don’t read the comments” is perhaps the most ancient and venerable of all internet-era axioms. Left untended, or even partially tended, internet comments have a way of racing straight to the bottom of the vile, toxic, nasty barrel of human hatred. But now, Google says it’s basically training a robot to filter them out for you, so human readers and moderators can catch a break.

Over the years, many strategies have been tried for filtering out toxic comments. Countless sites and systems employ some kind of blacklist-style keyword filter, but those aren’t enough, not by a long shot. All of us have seen comments that are horribly sexist or racist, for example, without using a single “forbidden” slur.

This is why many platforms still have to rely on human moderators to trudge through the sludge. Google isn’t alone in turning to machine-learning AI to filter content for humans, though; Facebook, too, has been building out the bots in recent years.

Google’s new tool is called Perspective. The idea is that it applies a “toxicity” filter to comments.

Google defines “toxic” as “a rude, disrespectful, or unreasonable comment that is likely to make you leave a discussion,” and it trained its bot to recognize toxic material by first gathering data from humans. People were asked to rate real comments on a scale from “very toxic” to “very healthy,” and the machine learns from there.

By screening with that filter, Perspective determines how similar a given comment is to other comments that humans have marked as either toxic or not.
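Google hasn’t published Perspective’s model in this announcement, and it is a trained machine-learning system rather than anything this simple. Still, the basic idea of scoring a new comment by its resemblance to human-rated ones can be illustrated with a toy sketch. This one assumes word overlap (Jaccard similarity) as the measure of “similar” and averages the human labels of the closest rated comments, a k-nearest-neighbor approach. The function names, the extra “healthy” example, and the 0.5 fallback score are all hypothetical choices for illustration.

```python
from __future__ import annotations

import re

def tokens(text: str) -> set[str]:
    # Lowercased word tokens; a real system would use far richer features.
    return set(re.findall(r"[a-z']+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    # Share of words two comments have in common, from 0.0 to 1.0.
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def toxicity_score(comment: str, labeled: list[tuple[str, float]], k: int = 3) -> float:
    """Hypothetical scorer: similarity-weighted average of the human labels
    (1.0 = toxic, 0.0 = healthy) of the k most similar rated comments."""
    words = tokens(comment)
    nearest = sorted(
        ((jaccard(words, tokens(text)), label) for text, label in labeled),
        reverse=True,
    )[:k]
    total = sum(sim for sim, _ in nearest)
    if total == 0:
        return 0.5  # no overlap with any rated comment: no evidence either way
    return sum(sim * label for sim, label in nearest) / total

# Toy "human ratings": the first three echo examples from this article;
# the last one is made up for illustration.
LABELED = [
    ("How can you be so stupid?", 1.0),
    ("You're a stupid jerk", 1.0),
    ("Climate changes naturally", 0.0),
    ("I disagree with your reading of the data", 0.0),
]

print(toxicity_score("Stop being so stupid", LABELED))
print(toxicity_score("Climate changes are natural", LABELED))
```

Because the sketch only counts shared words, it inherits the same weakness as a keyword blacklist: a nasty comment phrased in words no rater has seen gets no signal at all. That is one reason real systems learn from large, varied sets of ratings instead.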

The site has a tool showing how comments rate on the toxicity scale for three highly contentious topics: climate change, Brexit, and the 2016 U.S. election. Under climate change, for example, the comment “How can you be so stupid?” rates as toxic; the comment “Climate changes naturally,” on the other hand, is considered non-toxic.

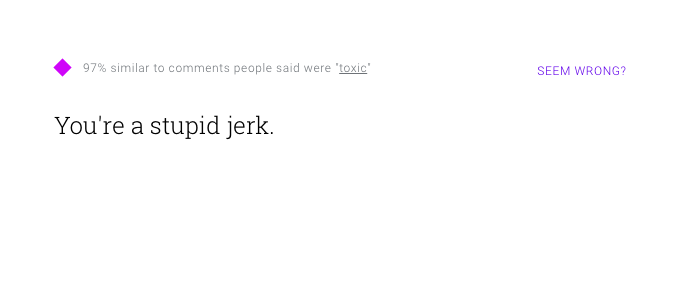

You can also try it live in a text-entry box on the site. For example, the comment “You’re a stupid jerk” rates as highly likely to be toxic, as do more profane variations on the same general thought.

The text-entry feature cautions that you may disagree with Google’s toxicity assessment of the content you typed, and adds that if you do, “Please let us know! It’s still early days and we will get a lot of things wrong.”

That, in turn, points to one of the big challenges with a machine-learning algorithm like this: it can only ever be as good as its input. To succeed, it will need feedback from a wide, genuinely diverse array of users. After all, the folks who regularly sling online vitriol at people who don’t look or think like them probably have a different threshold for toxicity than the folks on the receiving end.

And indeed, a Washington Post writer tried the filter against some deeply unsavory terms, and found that while one well-known racial slur rated as 82% toxic, other well-known slurs rated as low as 32% toxic.

It’s not surprising that large companies want to find people-free solutions to the toxicity problem. For one thing, paying a workforce gets expensive very quickly, even if the workers are in nations with lower minimum and average wages than the U.S.

For another, content moderation is extremely hard on the people who do it, as investigations by both Wired and The Verge have shown. Moderators who work at any kind of volume burn out after reviewing endless tides of hate speech and violent, nightmare-fuel images.

Google’s initial partners in developing Perspective include Wikipedia, The Economist, The New York Times, and The Guardian, all of which want to attract and retain high-quality discussion and debate while minimizing, or at least reducing, abuse and attacks against participants.

Want more consumer news? Visit our parent organization, Consumer Reports, for the latest on scams, recalls, and other consumer issues.